How to protect yourself from deepfake videos: real tips and smart tech

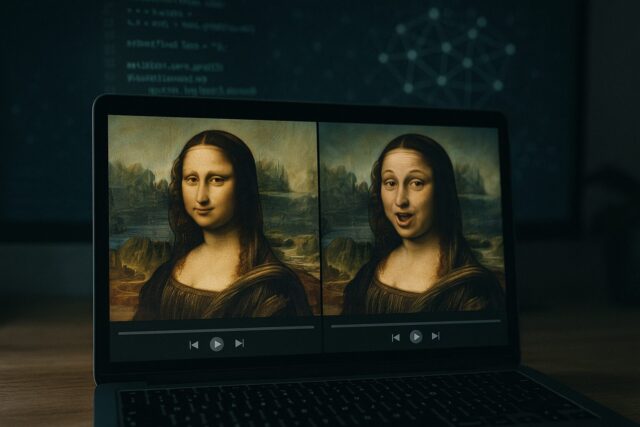

Artificial intelligence is doing some amazing things—helping doctors diagnose faster, making our devices smarter, even writing stories like this one. But it also has a darker side. One of the most alarming developments in recent years is deepfake technology—realistic but fake videos and audio created with AI. These fakes can be incredibly convincing, spreading false information, damaging reputations, and making us question what’s real online.

So, how can you spot a deepfake? And more importantly, how can you protect yourself? This guide covers everything you need to know—how deepfakes work, why they’re dangerous, how to detect them, and what tools and habits can help you stay safe in an age of digital deception.

What exactly is a deepfake?

The term “deepfake” blends “deep learning” (a branch of artificial intelligence) and “fake.” It refers to synthetic video or audio created with deep learning models, often autoencoders or GANs (Generative Adversarial Networks), in which one network generates fakes while a second network tries to tell them apart from real footage. These fakes can swap someone’s face onto another body, mimic voices, and even generate entire speeches or scenes that never happened.

Similar face-replacement techniques have legitimate uses in film and special effects, but deepfakes have found a darker purpose: creating false political statements, fake celebrity scandals, and even impersonating CEOs to steal money.

How are deepfakes made?

Creating a high-quality deepfake isn’t as simple as pressing a button, but it’s getting easier every year. Here’s a simplified look at how it works:

- Step 1: Collect lots of data. Videos, images, and audio clips of the target person are gathered, usually from social media, interviews, and public appearances.
- Step 2: Train the AI model. The deepfake software learns the target’s face, voice, gestures, and expressions using machine learning.
- Step 3: Merge and edit. The AI swaps the target’s face or voice onto someone else in a new video or audio clip.
- Step 4: Touch up. Lighting, lip sync, and sound are tweaked to make everything look natural.

The result? A video that might fool even the most careful viewer.

Why are deepfakes so dangerous?

They can spread disinformation

A deepfake can make it look like a politician said something shocking—or even declared war. That kind of fake news can cause chaos, especially if it spreads quickly on social media.

They can ruin lives

People have had their faces put into fake porn videos or videos of violent acts—then been blackmailed or publicly humiliated. The emotional damage can be severe.

They can be used for fraud

In reported cases, scammers have cloned the voices of company executives, and in at least one case faked an entire video call, to trick employees into transferring sums ranging from hundreds of thousands to millions of dollars.

They break trust in what we see

If we can’t believe our eyes or ears anymore, how can we trust anything we see online? Deepfakes could make us all more skeptical—even of real, honest content.

How to spot a deepfake

While some deepfakes are nearly perfect, many still have telltale signs. Keep an eye (and ear) out for:

Visual red flags

- Eyes that don’t blink naturally or look “dead”
- Mismatched skin tones on the face and neck
- Weird lip movements that don’t match the words
- Glitches or distortions around the edges of the face
- Shadows and lighting that seem off
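The blinking check in the list above can be roughed out in code. This is only a minimal sketch: it assumes you already have a per-frame “eye openness” number from some face-landmark tool (not shown here), and the function names and thresholds are illustrative guesses rather than values taken from any real detector.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count blinks as transitions from 'eye open' to 'eye closed'.

    `openness` holds one value per video frame (higher means more open).
    `closed_below` is an assumed threshold, not a calibrated one.
    """
    blinks = 0
    was_closed = False
    for value in openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new closure started on this frame
        was_closed = is_closed
    return blinks


def blink_rate_looks_odd(
    openness: Sequence[float],
    fps: float,
    low_per_min: float = 4.0,
    high_per_min: float = 40.0,
) -> bool:
    """Return True if blinks per minute fall outside an assumed normal band."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(openness) / fps / 60.0
    rate = count_blinks(openness, 0.2) / minutes
    return rate < low_per_min or rate > high_per_min
```

A clip with no blinks at all over 30 seconds would be flagged, while one with a blink every few seconds would not. Treat the result as one weak hint among many: short clips, sunglasses, or heavy compression can all throw it off, and newer deepfakes often blink convincingly.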

Audio red flags

- Strange pacing or robotic speech patterns
- Audio that doesn’t sync with the video
- Digital “glitches” or echo in the voice

Context clues

- The person is saying something completely out of character
- The source of the video is sketchy or unknown
- It went viral overnight from a random website

If something feels off, treat it as unverified until you can check it against a source you trust.

Tech tools that can help

AI-powered detection tools

Big tech companies and researchers are building tools that analyze videos and detect signs of tampering.

- Microsoft Video Authenticator: Gives a confidence score for how likely it is that a photo or video has been manipulated.
- Deepware Scanner: A scanning tool, available as a mobile app, that flags suspicious content.
- Meta (Facebook/Instagram): Uses internal tools to detect and label altered videos.

Blockchain and media fingerprinting

Some companies are using blockchain to log original versions of media files, so changes are easy to trace. Others are embedding invisible “fingerprints” in authentic content.
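To make the logging idea concrete, here is a minimal sketch of a tamper-evident log in plain Python. It is not a real blockchain and not any company’s product: it simply chains SHA-256 hashes so that changing an earlier entry breaks every later one. The function names and the log layout are assumptions made for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of some bytes."""
    return hashlib.sha256(data).hexdigest()


def _hash_body(body: dict) -> str:
    # Serialize with sorted keys so the same body always hashes the same way.
    return fingerprint(json.dumps(body, sort_keys=True).encode("utf-8"))


def append_entry(log: list, media: bytes, label: str) -> dict:
    """Append a record of `media` that is linked to the previous entry."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS
    body = {
        "label": label,
        "media_hash": fingerprint(media),
        "prev_hash": prev_hash,
    }
    entry = {**body, "entry_hash": _hash_body(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Check that no entry was altered and the chain links are intact."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("label", "media_hash", "prev_hash")}
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["entry_hash"] != _hash_body(body):
            return False
        prev_hash = entry["entry_hash"]
    return True
```

To check a file later, you would recompute `fingerprint()` on it and compare the result with the logged `media_hash`. One caveat: a cryptographic hash changes completely if the file is merely re-encoded or resized, which is why the “invisible fingerprint” approaches mentioned above rely on watermarks or perceptual hashes instead.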

Content authenticity standards

Initiatives like the Content Authenticity Initiative (led by Adobe) aim to attach secure metadata to photos and videos—like where and when they were made, and whether they’ve been edited.
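As a rough illustration of “secure metadata,” the sketch below bundles where and when a file was made, plus its edit history, into a small manifest and signs it. This is a simplified stand-in, not the Content Authenticity Initiative’s actual format: real provenance standards use public-key signatures and certificates, while this example assumes a shared secret key and Python’s built-in HMAC. All field and function names are hypothetical.

```python
import hashlib
import hmac
import json


def _sign(claims: dict, key: bytes) -> str:
    # Sorted keys give a stable byte string to sign.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_manifest(
    media: bytes, created_at: str, location: str, edits: list, key: bytes
) -> dict:
    """Build a signed record describing a media file (illustrative only)."""
    claims = {
        "media_sha256": hashlib.sha256(media).hexdigest(),
        "created_at": created_at,
        "location": location,
        "edits": edits,  # e.g. ["cropped", "color corrected"]
    }
    return {"claims": claims, "signature": _sign(claims, key)}


def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the metadata is unmodified AND matches this exact file."""
    claims = manifest["claims"]
    signature_ok = hmac.compare_digest(_sign(claims, key), manifest["signature"])
    media_ok = claims["media_sha256"] == hashlib.sha256(media).hexdigest()
    return signature_ok and media_ok
```

If someone swaps the video or quietly edits the “where and when” fields, `verify_manifest` returns `False`. The design point is that the metadata and the file are bound together: neither can change without the signature check failing.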

What you can do to protect yourself

Think before you share

- Ask yourself: Is the source reliable? Is it from a reputable outlet?
- Don’t spread a video just because it’s shocking or emotional. That’s how fake content thrives.

Limit your personal exposure

- Avoid uploading too many selfies or videos to public platforms. Every image makes it easier for AI to mimic your face or voice.
- Keep privacy settings tight on TikTok, Instagram, and YouTube.

Use detection apps

Apps like Sensity, Deepware, or Truepic can help analyze suspicious videos before you forward them to others.

Watermark your own content

If you post videos online, use subtle watermarks or metadata that help prove they’re authentic—and harder to reuse maliciously.

Be aware of emotional manipulation

Deepfakes often play on fear, anger, or shock. If something gets a strong reaction from you, pause and fact-check before sharing.

The legal side: is anything being done?

Yes—but it’s still a work in progress. Different countries are developing laws to tackle deepfake abuse:

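- EU: The Digital Services Act pushes very large platforms to label manipulated media, and the AI Act adds transparency obligations for deepfakes.
- USA: Some states (like California) have passed laws restricting deceptive political deepfakes close to elections.
- UK: The Online Safety Act (formerly the Online Safety Bill) includes provisions against non-consensual deepfake porn.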

Victims can sometimes sue for defamation, privacy violations, or emotional distress. But the law often lags behind the technology—so prevention is still your best defense.

What the future looks like

Deepfakes are getting better. Some can now be made in real time—during video calls or livestreams. That means it’s more important than ever to stay informed and cautious.

Here’s what needs to happen:

- Smarter tech: AI that detects AI, with better accuracy and speed.
- Stronger laws: Governments need to keep up and enforce accountability.
- Smarter people: That’s you. Learning how to spot, stop, and report deepfakes is your superpower in the digital age.

The bottom line? We can’t stop deepfakes from existing. But we can make sure they don’t fool us—or hurt the people we care about.

Image(s) used in this article are either AI-generated or sourced from royalty-free platforms like Pixabay or Pexels.